Since personal computing arrived in the home, the devices most commonly used in the modern world have settled into something of a rut. The workloads these machines handle rarely change, which has allowed engineers to tailor them to those everyday jobs. Your computer, smartphone, and other digital tools are great for opening emails and browsing the web. When it comes to more complex or specialized tasks, though, even the most powerful workstations can quickly begin to falter.

There is a widespread misconception about how computers achieve performance. Raising clock speeds, pushing higher voltages, and adding new parts is not a sure way to improve real-world performance, even if it improves benchmark results. Instead, to give a machine a fighting chance with the most demanding workloads, engineers have to start with the data being processed and build a system around it.

A great real-world example of this problem can be found in the recent history of cryptocurrency mining. Originally, many miners used regular off-the-shelf parts, the same ones found in ordinary computers. Those machines were never built for such demanding workloads, which pushed miners toward hardware acceleration in the form of graphics cards.

Unlike a normal CPU, which is designed to handle a very diverse range of tasks, a GPU excels at bulk computation, having been designed to rapidly refresh the data displayed on a monitor. This approach became so popular for a time that it contributed to a worldwide shortage of graphics cards. Even so, GPUs cannot compete with the strongest dedicated mining machines. ASICs, or application-specific integrated circuits, are chips designed for a single task. Many have appeared in the mining world, but this approach only makes sense when demand is high enough to justify the cost of designing and manufacturing a custom chip.

What about workloads that don't have a huge customer base? Medical research, low-latency packet processing, and high-frequency trading are all fields that can't rely on conventional computers or servers to get the job done. Instead, the users themselves have to find ways to power their work. Most organizations, however, don't have the resources to fund the research and development of custom hardware like this.

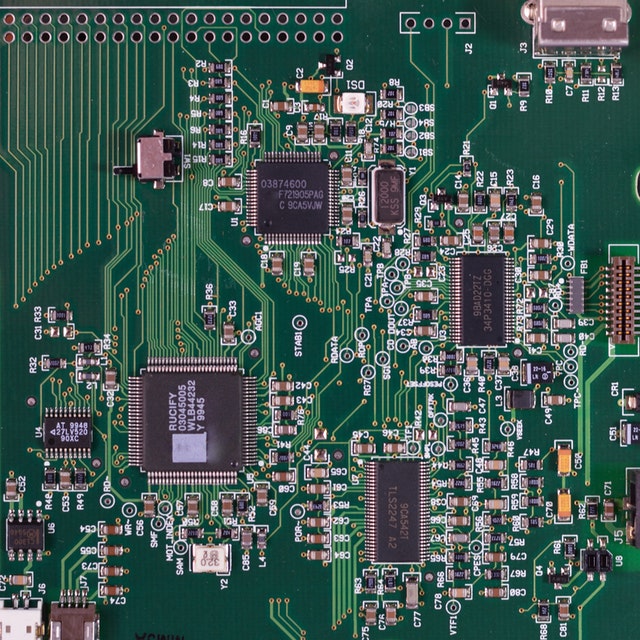

This is where FPGA (field-programmable gate array) boards come in handy. They come in many shapes and sizes, but they share two common attributes: modularity and freedom. Unlike a conventional machine, an FPGA consists of an array of logic blocks, each of which can be individually programmed to perform its own task. Perhaps their biggest selling point is that they are never restricted to one job, because the chip can be reconfigured after it has been manufactured. Their range of applications is vast, letting engineers solve new problems and adapt their resources with small changes to the code, rather than investing in systems that cost a small fortune for each job.
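To make the idea of individually programmable logic blocks more concrete, here is a minimal Python sketch. It assumes the common design in which each logic block is built around a small look-up table (LUT) whose configuration bits act as a truth table. The `LogicBlock` class and its `program`/`evaluate` methods are hypothetical names for illustration, not any vendor's API; real FPGAs are configured from hardware description languages such as Verilog or VHDL.

```python
from itertools import product


class LogicBlock:
    """Hypothetical model of one FPGA logic block as a k-input look-up table.

    Its behaviour is defined entirely by its configuration bits: one output
    bit for each of the 2**k possible input combinations.
    """

    def __init__(self, num_inputs: int):
        self.num_inputs = num_inputs
        self.config = [0] * (2 ** num_inputs)

    def program(self, func):
        """Fill the truth table by evaluating `func` on every input combination."""
        # product() yields combinations in order (0,0), (0,1), (1,0), (1,1), ...
        # so the first input is the most significant bit of the table index.
        for index, bits in enumerate(product((0, 1), repeat=self.num_inputs)):
            self.config[index] = int(bool(func(*bits)))

    def evaluate(self, *inputs: int) -> int:
        """Return the stored output bit for the given input bits."""
        if len(inputs) != self.num_inputs:
            raise ValueError(f"expected {self.num_inputs} inputs, got {len(inputs)}")
        index = 0
        for bit in inputs:
            index = (index << 1) | (bit & 1)
        return self.config[index]


# Two identical blocks, each programmed for its own task, form a half adder.
xor_block = LogicBlock(2)
xor_block.program(lambda a, b: a ^ b)  # sum bit
and_block = LogicBlock(2)
and_block.program(lambda a, b: a & b)  # carry bit


def half_adder(a: int, b: int) -> tuple:
    """Return (sum, carry) computed entirely by the programmed blocks."""
    return xor_block.evaluate(a, b), and_block.evaluate(a, b)


for a, b in product((0, 1), repeat=2):
    print(a, b, half_adder(a, b))

# Reprogramming: the same block takes on a new job with a new truth table.
spare = LogicBlock(2)
spare.program(lambda a, b: a | b)  # OR
print(spare.config)  # [0, 1, 1, 1]
spare.program(lambda a, b: 1 - a)  # NOT of the first input
print(spare.config)  # [1, 1, 0, 0]
```

No block's hardware changes between jobs; only its configuration bits do. That is the property the paragraph above describes when it says an FPGA can be adapted with small changes to the code.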

Because modern computers handle such a diverse range of jobs well, it is easy to assume that there is no need for more specialized systems. In reality, though, matching processing power to the data you have to work with becomes a much bigger challenge at commercial scale. Some computers are a jack of all trades, while others excel in a single field.
